When I began my programming career, Visual Basic (version 3.0) was just emerging as a popular alternative for creating Windows-based applications. Ironically, it was a component-based approach to development that brought forth an army of programmers developing third-party components. Programmers could quickly spin up applications by simply dragging and dropping components onto a form design surface and then wiring everything together with events.

Visual Basic grew in popularity and for a few wonderful years, it was the hot new kid on the block. It brought about a sort of programming renaissance and lowered the barrier to entry for many up and coming developers.

Of course, the reign of Visual Basic was not meant to last. No programming language (or platform for that matter) stays golden forever. In 2002, Microsoft launched the object-oriented successor to Visual Basic called VB.NET. I can remember being so excited when it was released and I dove head first into learning all about the new Framework.

I have a confession to make. In all my excitement over the introduction of the .NET Framework, I hurriedly convinced one of my clients to let me rewrite one of his VB applications using VB.NET. Unfortunately, I made a mistake that many developers at that time did. I assumed that rewriting an application like this would be a simple conversion process. That I would just go in and simply swap one set of code for another and magically everything would work wonderfully.

That did not happen. In fact, the application I rewrote started to fall down in production almost immediately. It experienced huge performance problems. In short, it was a disaster. I had actually taken something that was working quite well and turned it into soup.

The problem was that VB and VB.NET were so fundamentally different. Design patterns that worked well in VB, failed miserably in VB.NET.

What I should have done was to start slowly. Rather than try to convert an existing VB application to using the new .NET Framework, I should have looked at what I needed to do to make the .NET application perform well from the very beginning. I should have learned about best practices and really understood how different the new object-oriented approach to application design would be.

Eventually, I did do all those things, and also switched to using C# (which is much better imho), but only after I messed up my poor clients original VB application. Live and learn, I say. No one is perfect and we all make mistakes. The trick is to learn from them.

I feel like I have learned from mine. But the funny thing is that I can see a familiar pattern emerging now in the world of Salesforce development. I can see how the introduction of the Lightning framework will bring about some of the same challenges for Salesforce developers transitioning from Visualforce as there was for Microsoft developers transitioning from VB to .NET.

Some VB developers transitioning to .NET were never able to make the transition successfully and their careers suffered as a result. I imagine this might happen in the Salesforce world too and there will be Visualforce developers that stay Visualforce developers.

Of course, Visualforce is not going away anytime soon. But, anyone that believes it will not go away eventually is just kidding themselves. Salesforce is firmly committed to Lightning and it is no doubt the future of Salesforce development.

So, what should you do if you are a Salesforce developer that knows a lot about Visualforce?

You should embrace the new Lightning development framework and seek to understand how it differs from traditional Visualforce development. It really is like comparing apples and oranges. Begin by checking out this excellent Trail called Applying Visualforce Skills to Lightning. It helps to warn you about some of potential pitfalls you may encounter.

Just like with VB and .NET, design patterns that worked well in Visualforce, will fail in the world of Lightning. You could easily make an application perform worse if you just attempt to swap one set of code for another.

And so this is a good time for me to tell you about a new course I just started to design for Pluralsight. It will be titled Lightning Component Development Best Practices and I hope to release it before the end of the year.

In the meantime, you can also check out my latest course on Pluralsight titled, Customizing Salesforce with Lightning Components. I have an entire module dedicated to Working with Lightning Data Service and using Base Lightning Components, which are definitely best practices Lightning developers should be using now.

And stay tuned because I will be covering many best practice topics (such as this one about Conditional Rendering in Lightning) on this blog in the months to come.

Released yesterday is a new trail titled, “

Released yesterday is a new trail titled, “

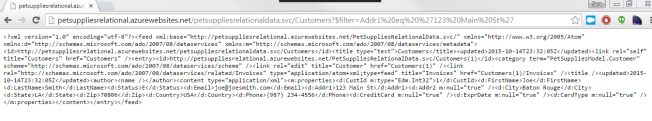

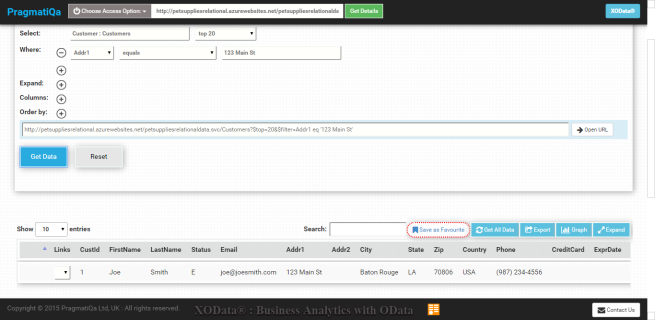

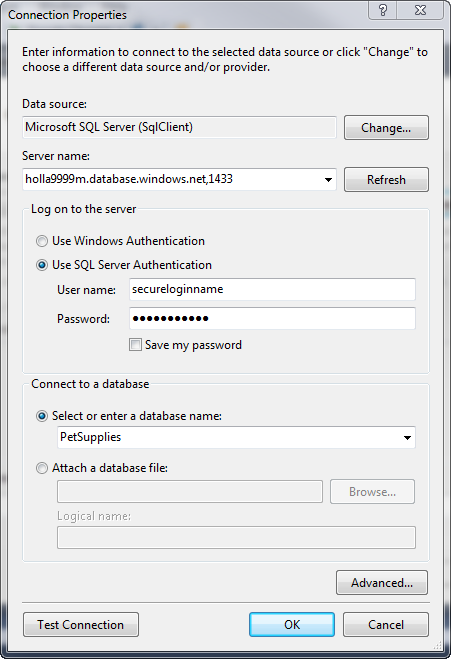

relationships using Lightning Connect. The talk was based on an article I wrote for DeveloperForce earlier this year titled, “

relationships using Lightning Connect. The talk was based on an article I wrote for DeveloperForce earlier this year titled, “